| << Chapter < Page | Chapter >> Page > |

As we will see later in this course , there now exist a wide variety of approaches to recover a sparse signal from a small number of linear measurements. We begin by considering a natural first approach to the problem of sparse recovery.

Given measurements and the knowledge that our original signal is sparse or compressible , it is natural to attempt to recover by solving an optimization problem of the form

where ensures that is consistent with the measurements . Recall that simply counts the number of nonzero entries in , so [link] simply seeks out the sparsest signal consistent with the observed measurements. For example, if our measurements are exact and noise-free, then we can set . When the measurements have been contaminated with a small amount of bounded noise, we could instead set . In both cases, [link] finds the sparsest that is consistent with the measurements .

Note that in [link] we are inherently assuming that itself is sparse. In the more common setting where , we can easily modify the approach and instead consider

where or . By setting we see that [link] and [link] are essentially identical. Moreover, as noted in "Matrices that satisfy the RIP" , in many cases the introduction of does not significantly complicate the construction of matrices such that will satisfy the desired properties. Thus, for most of the remainder of this course we will restrict our attention to the case where . It is important to note, however, that this restriction does impose certain limits in our analysis when is a general dictionary and not an orthonormal basis. For example, in this case , and thus a bound on cannot directly be translated into a bound on , which is often the metric of interest.

Although it is possible to analyze the performance of [link] under the appropriate assumptions on , we do not pursue this strategy since the objective function is nonconvex, and hence [link] is potentially very difficult to solve. In fact, one can show that for a general matrix , even finding a solution that approximates the true minimum is NP-hard. One avenue for translating this problem into something more tractable is to replace with its convex approximation . Specifically, we consider

Provided that is convex, [link] is computationally feasible. In fact, when , the resulting problem can be posed as a linear program [link] .

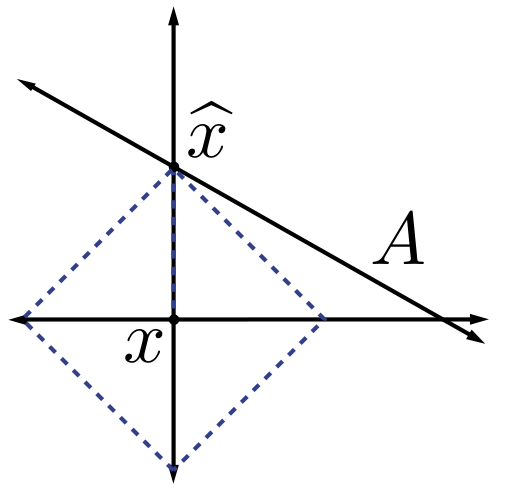

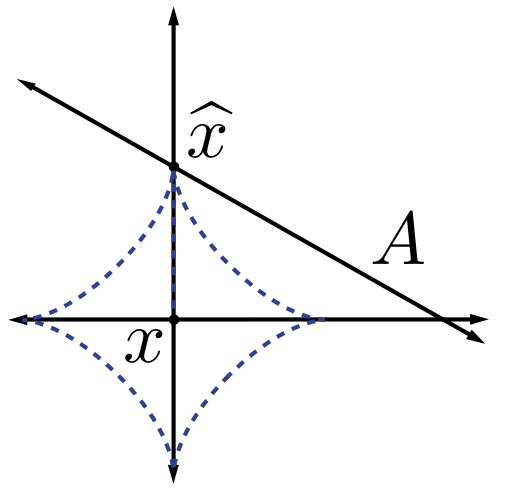

It is clear that replacing [link] with [link] transforms a computationally intractable problem into a tractable one, but it may not be immediately obvious that the solution to [link] will be at all similar to the solution to [link] . However, there are certainly intuitive reasons to expect that the use of minimization will indeed promote sparsity. As an example, recall the example we discussed earlier shown in [link] . In this case the solutions to the minimization problem coincided exactly with the solution to the minimization problem for any , and notably, is sparse. Moreover, the use of minimization to promote or exploit sparsity has a long history, dating back at least to the work of Beurling on Fourier transform extrapolation from partial observations [link] .

Additionally, in a somewhat different context, in 1965 Logan [link] showed that a bandlimited signal can be perfectly recovered in the presence of arbitrary corruptions on a small interval. Again, the recovery method consists of searching for the bandlimited signal that is closest to the observed signal in the norm. This can be viewed as further validation of the intuition gained from [link] — the norm is well-suited to sparse errors.

Historically, the use of minimization on large problems finally became practical with the explosion of computing power in the late 1970's and early 1980's. In one of its first applications, it was demonstrated that geophysical signals consisting of spike trains could be recovered from only the high-frequency components of these signals by exploiting minimization [link] , [link] , [link] . Finally, in the 1990's there was renewed interest in these approaches within the signal processing community for the purpose of finding sparse approximations to signals and images when represented in overcomplete dictionaries or unions of bases [link] , [link] . Separately, minimization received significant attention in the statistics literature as a method for variable selection in linear regression , known as the Lasso [link] .

Thus, there are a variety of reasons to suspect that minimization will provide an accurate method for sparse signal recovery. More importantly, this also provides a computationally tractable approach to the sparse signal recovery problem. We now provide an overview of minimization in both the noise-free and noisy settings from a theoretical perspective. We will then further discuss algorithms for performing minimization later in this course .

Notification Switch

Would you like to follow the 'An introduction to compressive sensing' conversation and receive update notifications?