
Orthogonality. When the angle between two vectors is $\pi/2$ (90°), we say that the vectors are orthogonal. A quick look at the definition of angle (Equation 12 from "Linear Algebra: Direction Cosines") leads to this equivalent definition for orthogonality:

$$(\mathbf{x}, \mathbf{y}) = 0 \iff \mathbf{x} \text{ and } \mathbf{y} \text{ are orthogonal.}$$

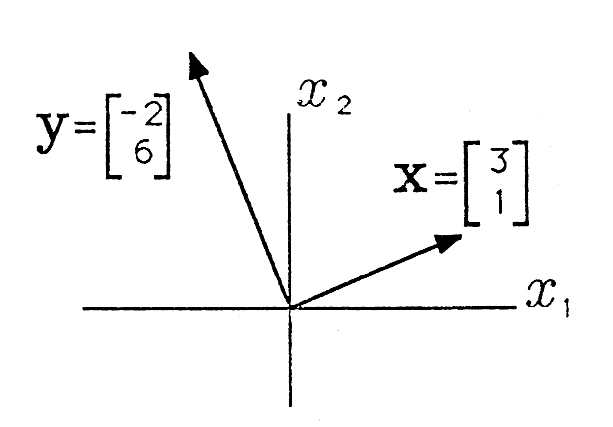

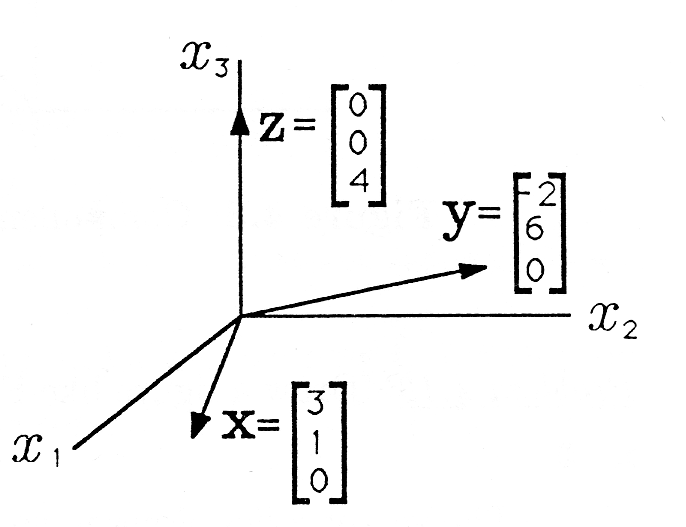

For example, in Figure 1(a), the vectors $\mathbf{x}$ and $\mathbf{y}$ are clearly orthogonal, and their inner product is zero:

$$(\mathbf{x}, \mathbf{y}) = x_1 y_1 + x_2 y_2 = 0.$$

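In Figure 1(b), the vectors $\mathbf{x}$, $\mathbf{y}$, and $\mathbf{z}$ are mutually orthogonal, and the inner product between each pair is zero:

$$(\mathbf{x}, \mathbf{y}) = 0, \qquad (\mathbf{x}, \mathbf{z}) = 0, \qquad (\mathbf{y}, \mathbf{z}) = 0.$$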

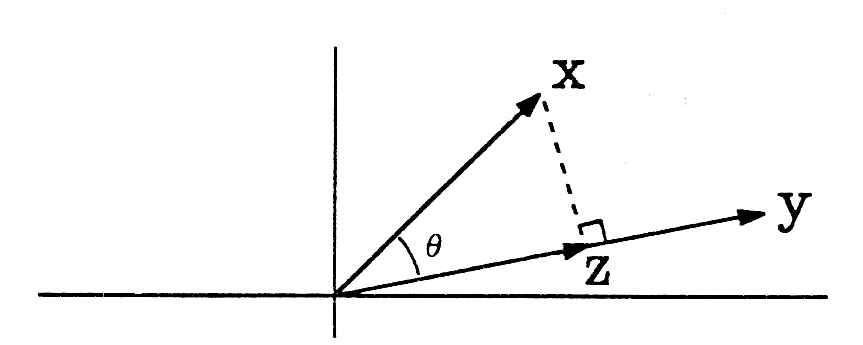
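The inner-product test for orthogonality is easy to check numerically. The following is a minimal Python sketch, assuming real vectors stored as plain lists and the usual angle definition $\cos\theta = (\mathbf{x},\mathbf{y})/(\|\mathbf{x}\|\,\|\mathbf{y}\|)$. The helper names `inner`, `norm`, `angle`, and `mutually_orthogonal`, the tolerance `tol`, and the example vectors are hypothetical choices for illustration; they are not the vectors drawn in Figure 1.

```python
import math
from itertools import combinations


def inner(x, y):
    """Inner product (x, y) = x_1*y_1 + ... + x_n*y_n of two real vectors.
    Assumes x and y have the same length."""
    return sum(a * b for a, b in zip(x, y))


def norm(x):
    """Norm ||x|| = sqrt((x, x))."""
    return math.sqrt(inner(x, x))


def angle(x, y):
    """Angle between nonzero x and y, using cos(theta) = (x, y) / (||x|| ||y||)."""
    c = inner(x, y) / (norm(x) * norm(y))
    # Clamp to [-1, 1] so round-off cannot push acos outside its domain.
    return math.acos(max(-1.0, min(1.0, c)))


def mutually_orthogonal(vectors, tol=1e-12):
    """True if every pair of distinct vectors has an inner product within tol of 0."""
    return all(abs(inner(u, v)) <= tol for u, v in combinations(vectors, 2))


# Illustrative vectors chosen for this sketch (not read off Figure 1).
x = [3.0, 1.0, 0.0]
y = [-1.0, 3.0, 0.0]
z = [0.0, 0.0, 2.0]

print(inner(x, y))                                   # 0.0
print(math.isclose(angle(x, y), math.pi / 2))        # True
print(mutually_orthogonal([x, y, z]))                # True
print(mutually_orthogonal([x, y, [1.0, 1.0, 1.0]]))  # False
```

The tolerance is there because inner products of orthogonal vectors with non-integer entries can come out as tiny nonzero numbers in floating point rather than exactly 0; the value `1e-12` is an arbitrary choice, not a standard.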

We can use the inner product to find the projection of one vector onto another, as illustrated in Figure 2. Geometrically, we find the projection of $\mathbf{x}$ onto $\mathbf{y}$ by dropping a perpendicular from the head of $\mathbf{x}$ onto the line containing $\mathbf{y}$. The perpendicular is the dashed line in the figure. The point where the perpendicular intersects $\mathbf{y}$ (or an extension of $\mathbf{y}$) is the projection of $\mathbf{x}$ onto $\mathbf{y}$, or the component of $\mathbf{x}$ along $\mathbf{y}$. Let's call it $\mathbf{z}$.

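The vector $\mathbf{z}$ lies along $\mathbf{y}$, so we may write it as the product of its norm $\|\mathbf{z}\|$ and its direction vector $\mathbf{u}_y$:

$$\mathbf{z} = \|\mathbf{z}\|\,\mathbf{u}_y = \|\mathbf{z}\|\,\frac{\mathbf{y}}{\|\mathbf{y}\|}. \tag{6}$$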

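But what is the norm $\|\mathbf{z}\|$? From Figure 2 we see that the vector $\mathbf{x}$ is just $\mathbf{z}$ plus a vector $\mathbf{v}$ that is orthogonal to $\mathbf{y}$:

$$\mathbf{x} = \mathbf{z} + \mathbf{v}, \qquad (\mathbf{v}, \mathbf{y}) = 0.$$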

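Therefore we may write the inner product between $\mathbf{x}$ and $\mathbf{y}$ as

$$(\mathbf{x}, \mathbf{y}) = (\mathbf{z} + \mathbf{v}, \mathbf{y}) = (\mathbf{z}, \mathbf{y}) + (\mathbf{v}, \mathbf{y}) = (\mathbf{z}, \mathbf{y}).$$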

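But because $\mathbf{z}$ and $\mathbf{y}$ both lie along $\mathbf{y}$, we may write the inner product $(\mathbf{x}, \mathbf{y})$ as

$$(\mathbf{x}, \mathbf{y}) = (\mathbf{z}, \mathbf{y}) = (\|\mathbf{z}\|\,\mathbf{u}_y, \|\mathbf{y}\|\,\mathbf{u}_y) = \|\mathbf{z}\|\,\|\mathbf{y}\|\,(\mathbf{u}_y, \mathbf{u}_y) = \|\mathbf{z}\|\,\|\mathbf{y}\|\,\|\mathbf{u}_y\|^2 = \|\mathbf{z}\|\,\|\mathbf{y}\|,$$

where the last step uses $\|\mathbf{u}_y\| = 1$.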

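From this equation we may solve for $\|\mathbf{z}\| = (\mathbf{x}, \mathbf{y})/\|\mathbf{y}\|$ and substitute into Equation 6 to write $\mathbf{z}$ as

$$\mathbf{z} = \|\mathbf{z}\|\,\frac{\mathbf{y}}{\|\mathbf{y}\|} = \frac{(\mathbf{x}, \mathbf{y})}{\|\mathbf{y}\|}\,\frac{\mathbf{y}}{\|\mathbf{y}\|} = \frac{(\mathbf{x}, \mathbf{y})}{(\mathbf{y}, \mathbf{y})}\,\mathbf{y}. \tag{10}$$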

Equation 10 is what we wanted: an expression for the projection of $\mathbf{x}$ onto $\mathbf{y}$ in terms of $\mathbf{x}$ and $\mathbf{y}$.
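Equation 10 translates directly into code. As a minimal sketch, assuming real vectors stored as Python lists, the hypothetical helper `project` computes $\mathbf{z}$ and the snippet then checks that the leftover $\mathbf{v} = \mathbf{x} - \mathbf{z}$ is orthogonal to $\mathbf{y}$. The example vectors are made up for illustration.

```python
def inner(x, y):
    """Inner product (x, y) of two real vectors of equal length."""
    return sum(a * b for a, b in zip(x, y))


def project(x, y):
    """Projection of x onto a nonzero y: z = ((x, y) / (y, y)) y, as in Equation 10."""
    c = inner(x, y) / inner(y, y)
    return [c * b for b in y]


x = [2.0, 3.0]
y = [4.0, 0.0]
z = project(x, y)
v = [a - b for a, b in zip(x, z)]  # the leftover v in x = z + v

print(z)            # [2.0, 0.0]
print(inner(v, y))  # 0.0
```

When the angle between $\mathbf{x}$ and $\mathbf{y}$ exceeds 90°, $(\mathbf{x}, \mathbf{y}) < 0$ and $\mathbf{z}$ points opposite to $\mathbf{y}$; for instance, `project([-1.0, 1.0], [2.0, 0.0])` returns $\mathbf{y}$ scaled by -0.5. Equation 10 still gives the right vector in that case, but the quantity $\|\mathbf{z}\|$ in the derivation is then best read as a signed length.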

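Orthogonal Decomposition. You already know how to decompose a vector in terms of the unit coordinate vectors,

$$\mathbf{x} = (\mathbf{x}, \mathbf{e}_1)\,\mathbf{e}_1 + (\mathbf{x}, \mathbf{e}_2)\,\mathbf{e}_2 + \cdots + (\mathbf{x}, \mathbf{e}_n)\,\mathbf{e}_n.$$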

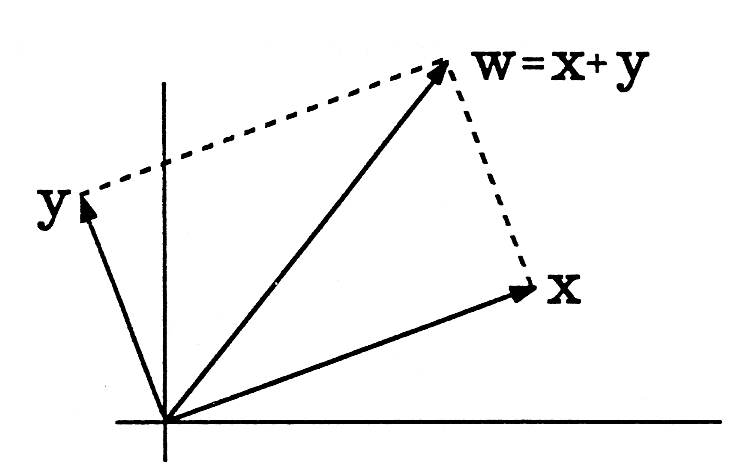

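In this equation, $(\mathbf{x}, \mathbf{e}_k)\,\mathbf{e}_k$ is the component of $\mathbf{x}$ along $\mathbf{e}_k$, or the projection of $\mathbf{x}$ onto $\mathbf{e}_k$. But the set of unit coordinate vectors is not the only possible basis for decomposing a vector. Let's consider an arbitrary pair of orthogonal vectors $\mathbf{x}$ and $\mathbf{y}$:

$$(\mathbf{x}, \mathbf{y}) = 0.$$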

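The sum of $\mathbf{x}$ and $\mathbf{y}$ produces a new vector $\mathbf{w}$, illustrated in Figure 3, where we have used a two-dimensional drawing to represent $n$ dimensions. The norm squared of $\mathbf{w}$ is

$$\|\mathbf{w}\|^2 = (\mathbf{w}, \mathbf{w}) = (\mathbf{x} + \mathbf{y}, \mathbf{x} + \mathbf{y}) = (\mathbf{x}, \mathbf{x}) + (\mathbf{x}, \mathbf{y}) + (\mathbf{y}, \mathbf{x}) + (\mathbf{y}, \mathbf{y}) = \|\mathbf{x}\|^2 + \|\mathbf{y}\|^2.$$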

This is the Pythagorean theorem in $n$ dimensions! The length squared of $\mathbf{w}$ is just the sum of the squares of the lengths of its two orthogonal components.
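A quick numerical check of this Pythagorean relation, written as a minimal sketch with real vectors as Python lists. The helpers `inner` and `add` and all example vectors are hypothetical. The second pair is deliberately not orthogonal, so the cross terms $(\mathbf{p}, \mathbf{q}) + (\mathbf{q}, \mathbf{p}) = 2(\mathbf{p}, \mathbf{q})$ in the expansion of the norm squared no longer vanish.

```python
def inner(x, y):
    """Inner product (x, y) of two real vectors of equal length."""
    return sum(a * b for a, b in zip(x, y))


def add(x, y):
    """Componentwise sum x + y."""
    return [a + b for a, b in zip(x, y)]


# Orthogonal pair in three dimensions: (x, y) = 2 + 2 - 4 = 0.
x = [1.0, 2.0, 2.0]
y = [2.0, 1.0, -2.0]
w = add(x, y)
print(inner(w, w), inner(x, x) + inner(y, y))  # 18.0 18.0

# Non-orthogonal pair: the mismatch equals the cross terms 2 (p, q).
p = [1.0, 0.0]
q = [1.0, 1.0]
s = add(p, q)
print(inner(s, s) - (inner(p, p) + inner(q, q)), 2 * inner(p, q))  # 2.0 2.0
```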

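The projection of $\mathbf{w}$ onto $\mathbf{x}$ is $\mathbf{x}$, and the projection of $\mathbf{w}$ onto $\mathbf{y}$ is $\mathbf{y}$:

$$\mathbf{w} = (1)\,\mathbf{x} + (1)\,\mathbf{y}.$$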

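If we scale $\mathbf{w}$ by $a$ to produce the vector $\mathbf{z} = a\mathbf{w}$, the orthogonal decomposition of $\mathbf{z}$ is

$$\mathbf{z} = a\mathbf{w} = (a)\,\mathbf{x} + (a)\,\mathbf{y}.$$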

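Let's turn this argument around. Instead of building $\mathbf{w}$ from orthogonal vectors $\mathbf{x}$ and $\mathbf{y}$, let's begin with arbitrary $\mathbf{w}$ and $\mathbf{x}$ and see whether we can compute an orthogonal decomposition. The projection of $\mathbf{w}$ onto $\mathbf{x}$ is found from Equation 10:

$$\mathbf{w}_x = \frac{(\mathbf{w}, \mathbf{x})}{(\mathbf{x}, \mathbf{x})}\,\mathbf{x}.$$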

But there must be another component of $\mathbf{w}$ such that $\mathbf{w}$ is equal to the sum of the components. Let's call the unknown component $\mathbf{w}_y$. Then

$$\mathbf{w} = \mathbf{w}_x + \mathbf{w}_y.$$

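Now, since we know $\mathbf{w}$ and $\mathbf{w}_x$ already, we find $\mathbf{w}_y$ to be

$$\mathbf{w}_y = \mathbf{w} - \mathbf{w}_x = \mathbf{w} - \frac{(\mathbf{w}, \mathbf{x})}{(\mathbf{x}, \mathbf{x})}\,\mathbf{x}.$$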

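Interestingly, the way we have decomposed $\mathbf{w}$ always produces $\mathbf{w}_x$ and $\mathbf{w}_y$ orthogonal to each other. Let's check this:

$$(\mathbf{w}_x, \mathbf{w}_y) = \left(\frac{(\mathbf{w}, \mathbf{x})}{(\mathbf{x}, \mathbf{x})}\,\mathbf{x},\; \mathbf{w} - \frac{(\mathbf{w}, \mathbf{x})}{(\mathbf{x}, \mathbf{x})}\,\mathbf{x}\right) = \frac{(\mathbf{w}, \mathbf{x})^2}{(\mathbf{x}, \mathbf{x})} - \frac{(\mathbf{w}, \mathbf{x})^2}{(\mathbf{x}, \mathbf{x})^2}\,(\mathbf{x}, \mathbf{x}) = 0.$$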

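To summarize, we have taken two arbitrary vectors, $\mathbf{w}$ and $\mathbf{x}$ (with $\mathbf{x}$ nonzero, so that $(\mathbf{x}, \mathbf{x}) \neq 0$), and decomposed $\mathbf{w}$ into a component in the direction of $\mathbf{x}$ and a component orthogonal to $\mathbf{x}$.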
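The whole procedure fits in a few lines. The following is a minimal sketch, assuming real vectors as Python lists; `orthogonal_decomposition` and `expand` are hypothetical helper names, and the vectors are illustrative. `expand` applies the same projection against every vector of a mutually orthogonal, nonzero basis; with the basis $\mathbf{e}_1, \ldots, \mathbf{e}_n$ it reproduces the familiar expansion in unit coordinate vectors.

```python
def inner(x, y):
    """Inner product (x, y) of two real vectors of equal length."""
    return sum(a * b for a, b in zip(x, y))


def orthogonal_decomposition(w, x):
    """Split w into w_x, the projection of w onto x, and w_y = w - w_x.
    Requires x to be nonzero so that (x, x) > 0."""
    xx = inner(x, x)
    if xx == 0:
        raise ValueError("x must be a nonzero vector")
    c = inner(w, x) / xx
    w_x = [c * b for b in x]
    w_y = [a - b for a, b in zip(w, w_x)]
    return w_x, w_y


def expand(w, basis):
    """Coefficients (w, b) / (b, b) of w along each vector b of a
    mutually orthogonal, nonzero basis."""
    return [inner(w, b) / inner(b, b) for b in basis]


w = [1.0, 2.0, 3.0]
x = [1.0, 1.0, 0.0]
w_x, w_y = orthogonal_decomposition(w, x)
print(w_x)              # [1.5, 1.5, 0.0]
print(w_y)              # [-0.5, 0.5, 3.0]
print(inner(w_x, w_y))  # 0.0

# An orthogonal basis that contains x itself.
basis = [[1.0, 1.0, 0.0], [-1.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(expand(w, basis))  # [1.5, 0.5, 3.0]
```

Here $\mathbf{w}_y = 0.5\,(-1, 1, 0) + 3\,(0, 0, 1)$, matching the last two coefficients returned by `expand`, because that basis contains $\mathbf{x}$. Repeating the subtraction step against several vectors in turn is the idea behind Gram-Schmidt orthogonalization.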


From 'A first course in electrical and computer engineering'.